Discover the diverse ways Machine Learning (ML) and Artificial Intelligence (AI) are applied to real-world problems! This comprehensive glossary features more than 20 applications that are changing the way the world works! Whether you’re an AI enthusiast or just beginning to venture into the realm of Machine Learning, this glossary serves as a valuable resource for expanding your understanding and deepening your knowledge of practical applications.

To present a clear and holistic view of these applications, we’ve organized the terms into related categories. Additionally, we’ve included links between different terms, allowing you to seamlessly navigate the interconnected world of AI and ML.

Psst. If you are confused about the difference between AI and ML, you should check out this article, which explains the difference in the beginning!

Don’t forget to explore our other glossaries, diving into the vast range of AI and ML subdomains:

- Machine Learning and Artificial Intelligence Glossary

- Supervised Learning Glossary

- Unsupervised Learning Glossary

- Reinforcement Learning Glossary

- Deep Learning Glossary

- Model Validation and Performance Evaluation Glossary

Without further ado, let’s delve into the fascinating realm of Machine Learning and Artificial Intelligence applications and uncover their incredible potential!

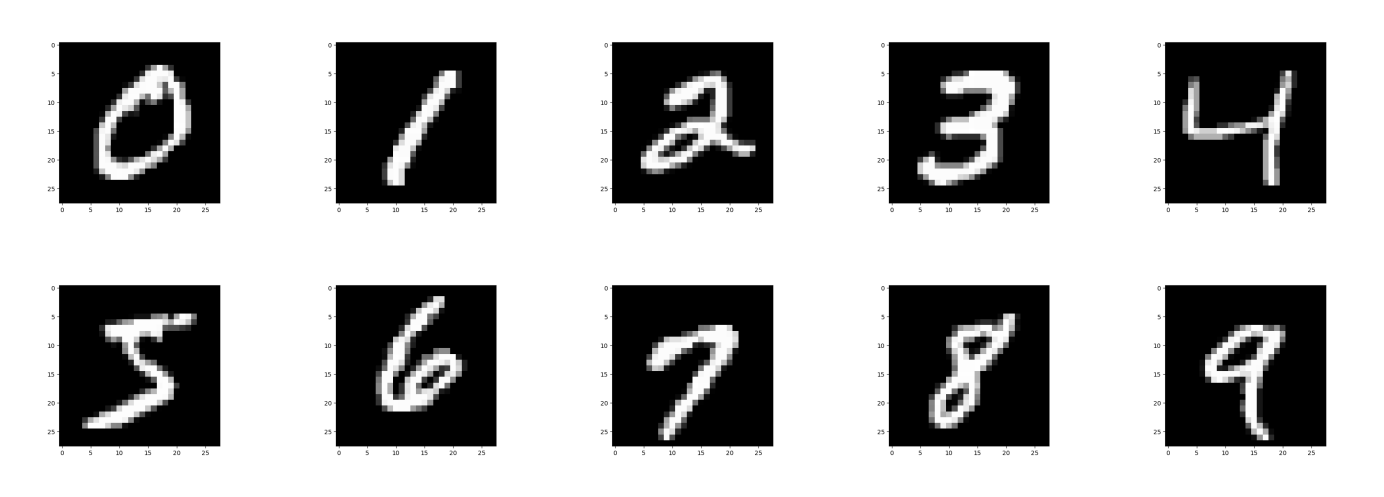

1. Image recognition

Image Recognition is the process by which a machine learning model identifies and categorizes objects, scenes, or activities within a given image or a series of images. It enables computers to understand and interpret visual data similarly to how humans perceive and process images.

The most common approach to Image Recognition is through Convolutional Neural Networks (CNNs), a type of deep learning architecture specifically designed for processing visual data. CNNs consist of multiple layers of interconnected neurons that are organized in a hierarchical manner. Each layer processes small portions of an image, detecting features such as edges, textures, and patterns. As the information passes through subsequent layers, these features are combined to form higher-level representations that help the model recognize and classify the content of the image.
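
To make the idea of a layer "detecting features such as edges" more concrete, here is a minimal sketch of the sliding-window operation at the heart of a convolutional layer. The tiny image and the hand-written `vertical_edge_kernel` are illustrative assumptions; in a real CNN the kernel values are learned during training rather than chosen by hand.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the
    element-wise products at each position, as a CNN layer does."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kernel_h):
                for dj in range(kernel_w):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Toy grayscale image: dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]

# Hand-picked kernel that responds to dark-to-bright vertical edges.
vertical_edge_kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(image, vertical_edge_kernel)
for row in feature_map:
    print(row)
```

The resulting feature map is non-zero only where the window straddles the boundary between the dark and bright regions, which is what "detecting an edge" means in practice.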

Training a CNN involves using a large, labeled dataset, where each image is tagged with its corresponding class or category. This makes it a type of supervised learning. The model learns to recognize patterns and features unique to each class, ultimately enabling it to classify new, unseen images accurately.

Image Recognition has numerous applications across various industries:

- Automotive: Self-driving cars use Image Recognition to detect objects, pedestrians, and other vehicles, allowing them to navigate safely and autonomously.

- Healthcare: Image Recognition is used to analyze medical images, such as X-rays or MRIs, to assist in the diagnosis of diseases, detect anomalies, and track the progression of medical conditions.

- Agriculture: Image Recognition helps monitor crop health, detect pests, and predict yields through the analysis of satellite or drone imagery.

- Social Media: Platforms like Facebook and Instagram use Image Recognition to identify objects and scenes in user-uploaded photos, enabling features like automated tagging, content filtering, and targeted advertising.

- Augmented Reality: Image Recognition is used to identify and track real-world objects, allowing digital content to be overlaid on top of the physical environment for interactive experiences.

- Search Engines: Content-based image retrieval systems use Image Recognition to find and display visually similar images based on user queries or example images.

- Facial recognition

Facial recognition is a special case of image recognition, where the goal is to detect (and possibly identify) faces in images. Facial recognition implementations typically work in a similar manner to other image recognition implementations (CNNs are a typical approach).

Examples of where facial recognition is used include digital cameras detecting faces to automatically focus on them, smartphone authentication, automated photo tagging on social media platforms, and identifying persons of interest in surveillance footage.

2. Image generation, also known as image synthesis or art generation

Image generation, often referred to as image synthesis, is the process of creating new images, typically through artificial intelligence and machine learning techniques. The generated images can be completely novel, such as a never-before-seen face, or they can be based on certain input parameters, like an image that resembles a description of a scene.

How does it work?

There are several approaches to image generation, but one of the most popular and effective methods is using a type of generative neural network known as a Generative Adversarial Network (GAN).

GANs consist of two parts: a generator and a discriminator. The generator’s job is to create images so convincing that the discriminator can’t tell whether they’re real or fake. The discriminator’s job is to get better at distinguishing real images from the fakes. Through this adversarial process, both the generator and discriminator improve over time, leading to increasingly realistic image generation.

GANs are typically trained using a large dataset of real images. During training, the generator starts by creating random images. These are presented to the discriminator along with real images. The discriminator’s feedback helps the generator improve its creations. Over many iterations, the generator learns to make images that closely resemble the ones in the training dataset.
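
The adversarial objectives described above can be sketched as two loss functions. This is only an illustration, not a full GAN: the discriminator outputs (probabilities that an image is real) are made-up numbers, and the generator loss shown is the commonly used "non-saturating" variant, which is an assumption about the setup rather than the only option.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: low when D outputs ~1 for real images
    and ~0 for generated (fake) images."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: low when D is fooled,
    i.e. when D outputs ~1 for generated images."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Hypothetical discriminator outputs early in training:
# fakes are easy to spot, so D is confident and G's loss is high.
early_g_loss = generator_loss([0.1, 0.2])
early_d_loss = discriminator_loss([0.9, 0.8], [0.1, 0.2])

# Hypothetical outputs once fakes look realistic:
# D can only guess (0.5), so G's loss has dropped.
late_g_loss = generator_loss([0.5, 0.5])
confused_d_loss = discriminator_loss([0.5, 0.5], [0.5, 0.5])

print(f"generator loss: {early_g_loss:.3f} -> {late_g_loss:.3f}")
print(f"discriminator loss: {early_d_loss:.3f} -> {confused_d_loss:.3f}")
```

In actual training, each network's parameters are updated by gradient descent on its own loss in alternating steps.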

Other methods for image generation include, for example, Diffusion Models and Variational Autoencoders (VAEs).

Image synthesis has a variety of practical applications:

- Entertainment and Art: GANs can generate artwork and design elements, creating novel visual content for games, films, and digital art projects.

- Style transfer: AI algorithms can be used to apply the artistic style of one image to another, enabling the creation of unique, stylized images, such as transforming a photo into a Van Gogh or Picasso-inspired painting.

- Fashion and Retail: Image synthesis can help generate images of products in various settings or on different models, saving time and resources on photoshoots.

- Medical Imaging: GANs can generate medical images to train diagnostic models without violating patient privacy.

- Deepfakes: This is a controversial use of image synthesis where AI is used to generate realistic images and videos, superimposing one person’s face onto another in videos. While there can be legitimate uses, such as in film post-production, deepfakes also raise concerns about misinformation and privacy.

- Virtual Reality: Image synthesis is used to create realistic environments in VR.

- In Machine Learning Research: Image generation is often used in data augmentation techniques to increase the amount of training data by creating transformed or synthetic images.

AI-driven image generation has the potential to revolutionize the creative process, democratize art, and inspire new, innovative applications across various industries. Even so, it’s crucial to consider the ethical implications when using it, especially concerning privacy and the generation of misleading or harmful content.

3. Speech recognition

Speech Recognition, also known as Automatic Speech Recognition (ASR), is the process by which a machine learning model converts spoken language into written text. It enables computers to understand and interpret human speech, allowing for more natural and efficient human-computer interaction.

Speech Recognition typically involves several stages:

- Acoustic signal processing: The raw audio waveform is pre-processed, converting the continuous signal into a discrete sequence of acoustic features that represent the relevant characteristics of speech, such as pitch, intensity, and spectral properties.

- Acoustic modeling: A machine learning model, often a deep neural network such as a Long Short-Term Memory (LSTM) network or a Transformer, learns to map the acoustic features to phonetic units, which are the basic building blocks of speech. The model is trained on a large dataset of labeled speech recordings, each paired with its text transcription.

- Language modeling: A statistical language model is used to estimate the probability of word sequences in the target language. This helps the Speech Recognition system to disambiguate between acoustically similar words or phrases by leveraging the context provided by surrounding words.

- Decoding: The acoustic and language models are combined to generate the most likely text transcription for the given speech input. This involves searching through the possible transcriptions to find the one that maximizes the combined likelihood from both models.
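
The decoding stage can be sketched as picking the candidate transcription with the highest combined score. The two candidate phrases and all probabilities below are invented for illustration, and `lm_weight` is a hypothetical tuning parameter; real systems search over a vastly larger space of candidates.

```python
import math

# Hypothetical acoustic model scores: how well each transcription
# matches the audio. The two phrases sound almost identical.
acoustic_log_probs = {
    "recognize speech": math.log(0.40),
    "wreck a nice beach": math.log(0.45),
}

# Hypothetical language model scores: how plausible each word
# sequence is in the target language.
language_log_probs = {
    "recognize speech": math.log(0.010),
    "wreck a nice beach": math.log(0.0001),
}


def decode(candidates, acoustic, language, lm_weight=1.0):
    """Return the candidate maximizing the combined log-likelihood."""
    def combined_score(text):
        return acoustic[text] + lm_weight * language[text]
    return max(candidates, key=combined_score)


candidates = list(acoustic_log_probs)
best = decode(candidates, acoustic_log_probs, language_log_probs)
acoustic_only = decode(candidates, acoustic_log_probs, language_log_probs,
                       lm_weight=0.0)

print("acoustic model alone:", acoustic_only)
print("with language model: ", best)
```

With the language model switched off, the slightly better acoustic match wins; with it switched on, the more plausible sentence is chosen, which is the disambiguation effect described in the language modeling step.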

Speech Recognition has numerous applications across various domains:

- Personal assistants: Virtual assistants like Siri, Alexa, and Google Assistant use Speech Recognition to understand and respond to voice commands, making it easier for users to interact with their devices.

- Transcription services: Speech Recognition is used to convert spoken content into written text, facilitating the transcription of meetings, interviews, and lectures, or the creation of subtitles for videos.

- Accessibility: Speech Recognition can help people with disabilities, such as those with limited mobility or impaired vision, by enabling voice-controlled interfaces and converting spoken content into text for assistive technologies like screen readers.

- Call centers: Customer support services use Speech Recognition to transcribe and analyze phone calls, helping them to monitor performance, identify customer needs, and improve overall efficiency.

- Voice-controlled systems: In-car infotainment, smart home devices, and other systems can be controlled using voice commands, making them more convenient and user-friendly.

- Language translation: Combined with machine translation, Speech Recognition enables real-time translation of spoken content, facilitating communication between speakers of different languages.

4. Speech Synthesis or Text-to-speech (TTS)

Speech synthesis, also known as text-to-speech (TTS), is a field of computer science that enables a computer to reproduce human speech. It converts written input into spoken output.

How does it work?

There are different ways to implement speech synthesis, but a common modern approach involves machine learning. The basic procedure is to train a model using a large amount of speech data. The model learns to generate speech by identifying patterns in this data, including how different sounds relate to different symbols or groups of symbols in a text.

For instance, deep learning based text-to-speech services like Google’s Text-to-Speech and Amazon’s Polly use a kind of model known as a sequence-to-sequence model. These models convert input sequences (in this case, sequences of characters or phonemes) into output sequences (sequences of audio features).

A typical pipeline for TTS includes the following stages:

- Text processing: This stage converts input text into a sequence of phonemes (distinct units of sound), and also predicts prosody (rhythm, stress, and intonation).

- Acoustic feature prediction: This stage generates audio features from the sequence of phonemes.

- Audio synthesis: This stage converts the predicted audio features into actual audio.
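
The following toy sketch only illustrates how data flows through the three stages; it does not produce intelligible speech. The `LEXICON` and `PHONEME_FEATURES` tables are hypothetical stand-ins for what trained models would predict, and each phoneme is rendered as a plain sine tone.

```python
import math

SAMPLE_RATE = 8000  # samples per second (arbitrary choice for this toy)

# Hypothetical pronunciation lexicon: word -> phonemes.
LEXICON = {"hi": ["HH", "AY"], "you": ["Y", "UW"]}

# Hypothetical "acoustic features" per phoneme: (pitch in Hz, duration in s).
PHONEME_FEATURES = {
    "HH": (180.0, 0.05),
    "AY": (220.0, 0.15),
    "Y": (200.0, 0.05),
    "UW": (160.0, 0.15),
}


def text_to_phonemes(text):
    """Stage 1: text processing (here a simple dictionary lookup)."""
    phonemes = []
    for word in text.lower().split():
        phonemes.extend(LEXICON[word])
    return phonemes


def predict_acoustic_features(phonemes):
    """Stage 2: acoustic feature prediction (here a table lookup)."""
    return [PHONEME_FEATURES[p] for p in phonemes]


def synthesize_audio(features):
    """Stage 3: audio synthesis (here one sine tone per phoneme)."""
    samples = []
    for pitch_hz, duration_s in features:
        n_samples = int(round(duration_s * SAMPLE_RATE))
        for i in range(n_samples):
            samples.append(math.sin(2 * math.pi * pitch_hz * i / SAMPLE_RATE))
    return samples


phonemes = text_to_phonemes("Hi you")
features = predict_acoustic_features(phonemes)
audio = synthesize_audio(features)
print(phonemes, len(audio), "samples")
```

In a real system, each stage would be replaced by a trained model (or the stages would be merged into a single end-to-end model), but the interfaces between them look broadly similar.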

Speech synthesis has a broad range of applications. Here are a few examples:

- Assistive technology: TTS can help visually impaired or dyslexic individuals by reading out written content. For example, screen reader software uses TTS to allow visually impaired users to hear, rather than read, text on a computer.

- Entertainment: TTS is used in video games and animations to generate dialogue, often when human voice acting isn’t feasible or cost-effective.

- Telecommunications: Many companies use TTS in their interactive voice response (IVR) systems to provide automated responses to phone calls.

- Education: Speech synthesis can aid language learning by providing accurate pronunciation of words in different languages.

- Personal Assistants: Virtual assistants like Siri, Alexa, or Google Assistant use TTS to respond to user queries with spoken output.

5. Autonomous vehicles

Machine Learning plays a crucial role in the development and functioning of autonomous vehicles, also known as self-driving cars. ML algorithms enable these vehicles to perceive their surroundings, make decisions, and navigate safely without human intervention. Multiple ML components work together in autonomous vehicles, including perception, localization, planning, and control.

- Perception: ML models, particularly Convolutional Neural Networks (CNNs), are used for processing and interpreting sensor data from cameras, LiDAR, and radar. These models perform tasks such as object detection, classification, and tracking to identify and monitor vehicles, pedestrians, cyclists, traffic signs, and other objects in the environment.

- Localization: ML techniques are employed to estimate the vehicle’s position and orientation in the world, relative to a pre-built map. Localization algorithms may use sensor fusion, combining data from GPS, inertial measurement units (IMUs), and visual sensors to improve the accuracy of the vehicle’s pose estimation.

- Planning: ML models are used to predict the future trajectories of other road users, enabling the autonomous vehicle to plan its path safely and efficiently. Reinforcement Learning (RL) or imitation learning methods can be employed to learn optimal decision-making policies for navigating complex traffic scenarios.

- Control: ML techniques are used to design advanced control systems that translate high-level driving commands, such as desired speed and trajectory, into low-level actuator commands for throttle, brake, and steering. These systems ensure smooth and safe vehicle operation by adapting to varying driving conditions and situations.
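
The sensor fusion mentioned under Localization can be illustrated with a very small example: combining two noisy position estimates by weighting each one by how much it is trusted. This inverse-variance weighting is the same calculation as the measurement update of a one-dimensional Kalman filter. The positions and variances below are made-up numbers, and a real vehicle would fuse many more signals in several dimensions.

```python
def fuse_estimates(value_a, variance_a, value_b, variance_b):
    """Combine two independent estimates of the same quantity,
    weighting each by the inverse of its variance."""
    weight_a = 1.0 / variance_a
    weight_b = 1.0 / variance_b
    fused_value = (weight_a * value_a + weight_b * value_b) / (weight_a + weight_b)
    fused_variance = 1.0 / (weight_a + weight_b)
    return fused_value, fused_variance


# Hypothetical 1D positions along a road, in meters.
gps_position, gps_variance = 105.0, 9.0    # GPS: noisy
odom_position, odom_variance = 100.0, 1.0  # IMU/odometry: more precise here

position, variance = fuse_estimates(
    gps_position, gps_variance, odom_position, odom_variance
)
print(f"fused position: {position:.2f} m (variance {variance:.2f})")
```

The fused estimate lands much closer to the more reliable sensor, and its variance is lower than that of either input, which is why combining sensors improves pose accuracy.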

Autonomous vehicles are being developed and tested by several companies worldwide, such as Waymo, Tesla, Cruise, and Argo AI. Their potential applications span various domains:

- Personal transportation: Self-driving cars can provide a safer, more comfortable, and convenient mode of transportation for individuals and families, potentially reducing the number of accidents caused by human error.

- Ride-hailing services: Companies like Uber and Lyft are investing in autonomous vehicles to create self-driving fleets, offering on-demand transportation without the need for human drivers.

- Public transportation: Autonomous buses and shuttles can enhance public transit systems, improving efficiency, reducing operational costs, and providing more reliable service.

- Logistics and delivery: Autonomous vehicles can be used for goods transportation and last-mile delivery, streamlining supply chains and reducing transportation costs.

- Mobility for people with disabilities: Autonomous vehicles can provide increased independence and mobility for individuals with disabilities, making transportation more accessible and inclusive.

While significant progress has been made in the development of autonomous vehicles, several challenges remain, such as regulatory approval, public acceptance, and the ability to handle complex or unpredictable situations. However, as ML techniques continue to advance, self-driving cars are expected to become an increasingly common part of our transportation landscape.

6. Medical diagnosis

Machine Learning has made significant strides in the field of medical diagnosis, enabling more accurate, efficient, and personalized healthcare. ML models can analyze complex medical data, identify patterns, and make predictions, assisting healthcare professionals in diagnosing diseases and planning treatment strategies. Here’s how ML is used in medical diagnosis:

- Medical Imaging: ML models, particularly Convolutional Neural Networks (CNNs), excel at analyzing medical images such as X-rays, MRIs, CT scans, and ultrasound images. These models can detect and segment abnormal regions, aiding in the diagnosis of conditions such as cancer, fractures, and organ abnormalities. ML-based computer-aided diagnosis (CAD) systems can help reduce human errors and improve the accuracy and efficiency of radiologists and other specialists.

- Electronic Health Records (EHRs): ML algorithms can analyze large volumes of EHR data, including patient demographics, medical histories, lab results, and physician notes. By identifying patterns and correlations, these models can predict patient outcomes, detect anomalies, and suggest personalized treatment plans.

- Genomic Data Analysis: ML models can process and interpret genomic data to identify gene mutations, variations, and patterns linked to specific diseases. This information can be used for early detection, predicting disease progression, and tailoring treatments to individual patients based on their genetic profiles.

- Wearable Devices and Remote Monitoring: ML algorithms can analyze data from wearable devices, such as heart rate monitors and glucose sensors, to detect early signs of health issues or monitor the effectiveness of treatment plans. This allows for continuous and non-invasive patient monitoring, enabling timely interventions and better management of chronic conditions.

- Natural Language Processing (NLP): ML-based NLP techniques can be used to analyze unstructured medical data, such as clinical notes or research articles. This can help healthcare professionals quickly access relevant information, identify potential risk factors, and make more informed diagnostic decisions.

Some examples of where ML is used in medical diagnosis include:

- Detection of diabetic retinopathy in retinal images, assisting ophthalmologists in the early diagnosis and prevention of vision loss.

- Identification of cancerous tumors in mammograms or histopathological images, improving the accuracy and efficiency of cancer screening.

- Prediction of cardiovascular events based on EHR data, enabling early intervention and more effective prevention strategies.

- Detection of early signs of Alzheimer’s disease or Parkinson’s disease through the analysis of brain imaging data or speech patterns.

Machine Learning in medical diagnosis has the potential to revolutionize healthcare by enhancing diagnostic accuracy, speeding up the decision-making process, and enabling personalized medicine. However, it is essential to ensure that these models are validated, interpretable, and unbiased to minimize the risk of misdiagnosis and ensure equitable healthcare outcomes for all patients.

7. Sentiment Analysis

Sentiment Analysis, also known as opinion mining or emotion AI, is a Natural Language Processing (NLP) technique used to determine the sentiment or emotion expressed in a piece of text, such as a review, comment, or social media post. The goal is to classify the text into categories like positive, negative, or neutral, and sometimes, more fine-grained emotions like happiness, sadness, anger, or surprise.

Sentiment Analysis typically involves the following steps (although more straightforward approaches are becoming possible with Large Language Models):

- Text preprocessing: Raw text is cleaned and processed to remove irrelevant information, such as special characters and stop words. The text may also be tokenized into words or phrases and converted to a numerical representation, such as word embeddings, that can be used as input to machine learning models.

- Feature extraction: Linguistic features, such as word frequency, n-grams, or syntactic patterns, are extracted from the preprocessed text. These features capture the structure and semantics of the text, which can help in sentiment classification.

- Sentiment classification: A machine learning model, such as a deep neural network, Support Vector Machine (SVM), or Naive Bayes classifier, is trained on a labeled dataset of text samples with known sentiment labels. The model learns to recognize patterns and features associated with each sentiment category, allowing it to classify new, unseen text accurately.
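
As a rough illustration of these steps, here is a minimal Naive Bayes sentiment classifier written from scratch. The four training sentences are invented, tokenization is just lowercasing and splitting on whitespace, and word counts serve as the features; a practical system would use far more data and a tested library implementation.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    """Text preprocessing: lowercase and split on whitespace."""
    return text.lower().split()


def train_naive_bayes(labeled_texts):
    """Feature extraction + training: count words per sentiment label."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    vocabulary = set()
    for text, label in labeled_texts:
        label_counts[label] += 1
        for word in tokenize(text):
            word_counts[label][word] += 1
            vocabulary.add(word)
    return word_counts, label_counts, vocabulary


def predict(text, model):
    """Classification: pick the label with the highest log-probability."""
    word_counts, label_counts, vocabulary = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, float("-inf")
    for label in label_counts:
        score = math.log(label_counts[label] / total_docs)  # class prior
        total_words = sum(word_counts[label].values())
        for word in tokenize(text):
            if word not in vocabulary:
                continue  # ignore words never seen in training
            # Laplace (add-one) smoothing avoids zero probabilities.
            likelihood = (word_counts[label][word] + 1) / (
                total_words + len(vocabulary)
            )
            score += math.log(likelihood)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Tiny hypothetical labeled dataset.
training_data = [
    ("great product love it", "positive"),
    ("really great service", "positive"),
    ("terrible product hate it", "negative"),
    ("really terrible support", "negative"),
]
model = train_naive_bayes(training_data)

print(predict("great service", model))
print(predict("terrible support", model))
```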

There are several approaches to Sentiment Analysis, including:

- Lexicon-based methods: These techniques rely on pre-built sentiment lexicons, which are lists of words or phrases with associated sentiment scores. The sentiment of a given text is determined by aggregating the scores of the words it contains.

- Supervised learning: Models are trained on labeled datasets, where each text sample is tagged with its corresponding sentiment label. The model learns to recognize patterns and features unique to each sentiment category.

- Transfer learning: Pre-trained language models, such as BERT or GPT, are fine-tuned on a smaller sentiment analysis dataset. This allows for faster training and better performance, even with limited labeled data.
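
Of the approaches above, the lexicon-based method is the simplest to sketch. The `SENTIMENT_LEXICON` below is a tiny hypothetical word list with made-up scores; real lexicons contain thousands of entries.

```python
# Hypothetical mini-lexicon: word -> sentiment score.
SENTIMENT_LEXICON = {
    "good": 1, "great": 2, "love": 2,
    "bad": -1, "terrible": -2, "hate": -2,
}


def lexicon_sentiment(text):
    """Sum the scores of known words and map the total to a label."""
    words = [word.strip(".,!?") for word in text.lower().split()]
    score = sum(SENTIMENT_LEXICON.get(word, 0) for word in words)
    if score > 0:
        return "positive", score
    if score < 0:
        return "negative", score
    return "neutral", score


print(lexicon_sentiment("I love this phone, great battery!"))
print(lexicon_sentiment("Terrible screen and bad sound."))
print(lexicon_sentiment("It arrived on Monday."))
```

Note that this simple aggregation ignores context: a phrase like "not good" would still be scored as positive, which is one reason learned models usually perform better.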

Sentiment Analysis has numerous applications across various domains:

- Customer feedback analysis: Companies use Sentiment Analysis to analyze customer reviews, comments, and social media posts to gauge customer satisfaction, identify pain points, and inform product or service improvements.

- Social media monitoring: Brands and businesses monitor social media sentiment to track public opinion, measure the impact of marketing campaigns, and manage their online reputation.

- Financial markets: Sentiment Analysis of news articles and social media discussions can help predict market trends, inform investment decisions, and detect potential risks.

- Political analysis: By analyzing public sentiment on social media or news articles, politicians and governments can gauge public opinion on policy decisions, track approval ratings, and tailor their messaging strategies.

- Customer support: Sentiment Analysis can help prioritize customer support tickets based on the sentiment expressed in the text, allowing companies to address critical issues more promptly.

By identifying and quantifying emotions in text, Sentiment Analysis provides valuable insights into human opinions and preferences, helping businesses and organizations make data-driven decisions and better understand their audience.

8. Recommender Systems

Recommender systems are an application of artificial intelligence that uses machine learning to provide personalized suggestions or recommendations to users by analyzing their preferences, behaviors, and interactions. These systems aim to improve user experience, increase engagement, and boost conversion rates by providing relevant, tailored content, products, or services.

Recommender systems typically use one of the following approaches or a combination thereof:

- Collaborative filtering: This method leverages the collective preferences or behaviors of users to make recommendations. It can be user-based, where recommendations are generated based on the preferences of similar users, or item-based, where recommendations are based on the similarity between items that a user has interacted with.

- Content-based filtering: This approach relies on the attributes or features of items to recommend content that is similar to what a user has previously liked or interacted with. For example, a movie recommender might suggest films with similar genres, actors, or directors.

- Hybrid methods: These systems combine collaborative filtering and content-based filtering techniques to leverage the strengths of both approaches and provide more accurate recommendations.
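
User-based collaborative filtering, the first approach above, can be sketched in a few lines. The users, movies, and ratings are invented for illustration, and cosine similarity is just one of several possible similarity measures.

```python
import math

# Hypothetical user -> {item: rating} data on a 1-5 scale.
ratings = {
    "alice": {"Inception": 5, "Interstellar": 4, "Titanic": 1},
    "bob": {"Inception": 5, "Interstellar": 5, "The Matrix": 5},
    "carol": {"Titanic": 5, "The Notebook": 4, "Inception": 1},
}


def cosine_similarity(a, b):
    """Similarity between two users' rating dictionaries."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[item] * b[item] for item in shared)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)


def recommend(user, ratings, top_n=1):
    """Predict ratings for unseen items as a similarity-weighted
    average of other users' ratings, then return the best items."""
    weighted_sums, similarity_sums = {}, {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        similarity = cosine_similarity(ratings[user], other_ratings)
        if similarity <= 0:
            continue
        for item, rating in other_ratings.items():
            if item in ratings[user]:
                continue  # only recommend items the user has not rated
            weighted_sums[item] = weighted_sums.get(item, 0.0) + similarity * rating
            similarity_sums[item] = similarity_sums.get(item, 0.0) + similarity
    predicted = {
        item: weighted_sums[item] / similarity_sums[item]
        for item in weighted_sums
    }
    return sorted(predicted, key=predicted.get, reverse=True)[:top_n]


print(recommend("alice", ratings))
```

Here Alice's tastes are closest to Bob's, so the item Bob rated highly that Alice has not seen yet is ranked first.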

Recommender systems work by:

- Data collection: User interaction data, such as browsing history, purchase history, ratings, or reviews, is collected from various sources.

- Data preprocessing: The raw data is cleaned and transformed into a structured format that can be used as input for ML models.

- Feature extraction: Relevant features, such as user preferences, item attributes, and interaction patterns, are extracted from the preprocessed data.

- Model training: ML models, such as clustering algorithms, matrix factorization, or neural networks, are trained on the data to learn the underlying patterns and associations between users and items.

- Recommendation generation: The trained models are used to generate personalized recommendations for each user based on their preferences, behavior, and item features.

Recommender systems are widely used across various industries and platforms:

- E-commerce: Online retailers like Amazon use recommender systems to suggest products based on users’ browsing and purchase history, improving the shopping experience and driving sales.

- Streaming services: Platforms like Netflix and Spotify use recommender systems to curate content based on users’ viewing or listening preferences, promoting user engagement and retention.

- News websites: News portals employ recommender systems to surface articles and stories that match users’ interests, encouraging them to spend more time on the site and explore more content.

- Social media: Platforms like Facebook and Instagram use recommender systems to display personalized content, such as posts, photos, or videos, in users’ feeds, increasing engagement and time spent on the platform.

By providing personalized recommendations, recommender systems enhance user experience, increase engagement, and ultimately drive better business outcomes.

9. Targeted Advertising and Personalized marketing

Targeted Advertising and Personalized Marketing are marketing strategies that leverage Machine Learning to deliver relevant and tailored content, products, or services to specific individuals or audience segments based on their interests, preferences, and behaviors. By customizing the marketing experience, these approaches aim to improve customer engagement, boost conversion rates, and increase customer lifetime value. Both can be seen as applications of recommender systems.

- Targeted Advertising: This strategy involves displaying ads to users based on their demographic, geographic, psychographic, or behavioral attributes. ML models analyze vast amounts of data, such as browsing history, search queries, and social media activity, to identify patterns and predict which ads are most likely to resonate with a particular user or audience segment.

- Personalized Marketing: This approach focuses on creating customized marketing experiences by tailoring content, offers, and recommendations to individual users or customer segments. ML models, such as collaborative filtering or content-based filtering algorithms, analyze user behavior and preferences to generate personalized product recommendations, curated content, or tailored promotional offers.

Both strategies involve the following steps:

- Data collection: User data, such as demographic information, browsing history, purchase history, and social media activity, is collected from various sources, including website cookies, mobile apps, and third-party data providers.

- Data preprocessing: Raw data is cleaned, processed, and transformed into a structured format that can be used as input to ML models.

- Feature engineering: Relevant features, such as user attributes, item attributes, and interaction patterns, are extracted from the preprocessed data to capture the relationships between users and items or content.

- Model training: ML models, such as recommendation algorithms or classification models, are trained on the labeled data to learn the underlying patterns and associations between users and items or content.

- Model deployment: The trained models are used to generate personalized recommendations, ads, or content for each user or audience segment in real-time.

- Evaluation and optimization: The performance of the targeted advertising or personalized marketing campaigns is continuously monitored, and the ML models are refined and optimized to improve their effectiveness over time.

Targeted Advertising and Personalized Marketing are used across various industries and platforms:

- E-commerce: Online retailers like Amazon use personalized recommendations to suggest products based on users’ browsing and purchase history, improving the shopping experience and increasing sales.

- Streaming services: Platforms like Netflix and Spotify leverage personalized recommendations to curate content based on users’ viewing or listening preferences, encouraging user engagement and retention.

- Social media: Networks like Facebook and Instagram use targeted advertising to display relevant ads based on users’ interests, behaviors, and social connections, increasing the likelihood of user engagement and ad conversions.

- Email marketing: Businesses use personalized marketing to deliver tailored offers, promotions, or content to subscribers based on their preferences, behavior, and customer journey stage, enhancing the effectiveness of email campaigns.

- Search engines: Google and Bing use targeted advertising to display relevant ads alongside search results based on users’ search queries, location, and browsing history, increasing the relevance and click-through rates of ads.

By leveraging ML to tailor marketing experiences to individual users or audience segments, targeted advertising and personalized marketing can enhance user engagement, improve conversion rates, and ultimately drive better business outcomes.

10. Virtual Assistant

A virtual assistant is a software-based application that uses artificial intelligence and machine learning to perform various tasks, provide information, and assist users in their daily activities. Virtual assistants, such as Apple’s Siri, Amazon’s Alexa, and Google Assistant, can understand natural language input, either in text or voice form, and interact with users to answer questions, manage schedules, send messages, control smart devices, and more. By automating routine tasks and providing personalized support, virtual assistants help users save time, increase productivity, and simplify their digital experiences.

11. Voice Assistance

Voice assistance refers to the use of a virtual assistant that lets users interact with digital devices, such as smartphones, smart speakers, or in-car infotainment systems, using spoken commands or questions. Powered by Natural Language Processing (NLP) and Speech Recognition, voice assistants, like Siri, Alexa, and Google Assistant, are designed to understand human speech, process the user’s intent, and respond with relevant information or actions, such as answering queries, setting reminders, controlling smart home devices, or providing navigation instructions. By offering a more natural and hands-free mode of interaction, voice assistants improve the user experience and increase the accessibility of digital technologies for a broader range of users.

12. Personalization

Personalization is the process of tailoring products, services, or content to meet the specific needs, preferences, and interests of individual users or customer segments. By leveraging data, artificial intelligence, and machine learning, personalization enables businesses to deliver customized experiences that resonate with their audience, ultimately leading to increased user engagement, higher conversion rates, and improved customer satisfaction. Personalization is widely used across various industries, such as e-commerce, streaming services, and digital marketing, to enhance user experience, build stronger customer relationships, and drive business growth.

13. Natural Language Processing (NLP)

Natural Language Processing (NLP) is a subfield of artificial intelligence and linguistics that focuses on enabling computers to understand, interpret, and generate human language in a way that is both meaningful and useful. NLP encompasses various techniques and algorithms that help machines analyze, process, and derive insights from unstructured textual or spoken data.

Machine learning techniques, especially deep learning methods like recurrent neural networks (RNNs) and transformers, have significantly advanced the capabilities of NLP systems in recent years.

NLP has numerous applications across various industries and domains:

- Text generation: Text generation is a subfield of NLP that focuses on creating meaningful and coherent strings of text automatically. The aim is for a machine to produce human-like text that is contextually relevant and grammatically correct. ML models like RNNs and transformers learn patterns in human language by being trained on large corpora of text data. They predict the next word or sequence of words based on the input given and the context learned during training.

- Chatbots and virtual assistants: NLP enables chatbots and virtual assistants, such as Siri or Alexa, to understand and respond to user queries, providing support, information, and services through natural language interactions.

- Sentiment analysis: Businesses can use NLP to analyze customer feedback, reviews, or social media comments to gauge public sentiment, identify trends, and inform decision-making.

- Information retrieval: Search engines like Google use NLP techniques to better understand user queries, identify relevant content, and deliver accurate search results.

- Machine translation: NLP-powered translation services, such as Google Translate, facilitate real-time language translation, breaking down communication barriers and enabling global collaboration.

- Text summarization: NLP can be used to automatically generate summaries of news articles, research papers, or other documents, helping users quickly grasp the main points.

- Resume screening: HR departments and recruitment agencies can use NLP to automatically analyze and filter job applicants’ resumes, streamlining the hiring process and identifying the best candidates.

- Speech recognition: NLP is essential in processing spoken language, enabling applications like voice assistants, transcription services, and voice-controlled devices.

- Question-answering: Extracting or generating answers to specific questions based on a given text.

- Named entity recognition: Identifying and categorizing entities, such as people, organizations, or locations, within the text.

- Part-of-speech tagging: Assigning grammatical categories, such as nouns, verbs, or adjectives, to words in a sentence.

NLP has become an integral part of many digital technologies, enhancing user experiences, streamlining workflows, and enabling new forms of communication and information access.
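
To make the idea of next-word prediction from the text generation entry more concrete, here is a minimal sketch of a bigram model. It is far simpler than the RNNs and transformers mentioned above, and the toy corpus and the function names `train_bigram_model` and `predict_next` are made up for illustration. It only counts which word most often follows another.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count, for every word, which words follow it in the corpus."""
    followers = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for current_word, next_word in zip(tokens, tokens[1:]):
            followers[current_word][next_word] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Toy corpus, invented for this example.
corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the rug",
]
model = train_bigram_model(corpus)
print(predict_next(model, "sat"))  # on
print(predict_next(model, "the"))  # cat
```

Modern language models replace these raw counts with learned parameters and look at much longer contexts, but the underlying task of predicting what comes next is the same.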

14. Outlier, Anomaly, and Fraud Detection

Outlier detection, anomaly detection, and fraud detection are related techniques used to identify unusual or suspicious patterns, events, or data points within datasets. These techniques help discover instances that deviate significantly from the expected behavior or norm, often indicating errors, abnormalities, or malicious activities.

- Outlier detection: Outlier detection aims to identify data points that are significantly different from the majority of the data. Outliers can be the result of errors, natural variability, or rare events. They can provide valuable insights, indicate potential issues, or reveal new trends. Outlier detection is widely used in fields such as finance, healthcare, and manufacturing for quality control, diagnostics, and monitoring purposes.

- Anomaly detection: Anomaly detection is a broader concept that focuses on identifying unusual patterns or events that do not conform to an expected behavior. While outliers refer to individual data points, anomalies can be more complex, involving a series of data points or a combination of features. Anomaly detection is applied in various domains, including network security (detecting intrusions), industrial equipment monitoring (identifying malfunctions), and environmental monitoring (detecting unusual changes).

- Fraud detection: Fraud detection is a specific application of anomaly detection that aims to identify fraudulent activities, such as credit card fraud, insurance fraud, or cybercrimes. Fraud detection techniques analyze transaction data, user behavior, and other contextual information to identify suspicious activities that may indicate fraud attempts or financial misconduct. Businesses, banks, and financial institutions use fraud detection systems to minimize losses, protect customers, and maintain trust.

Outlier detection, anomaly detection, and fraud detection are often based on machine learning and statistical methods:

- Statistical methods: Techniques such as the Z-score, IQR (interquartile range), and Grubbs’ test can be used to identify data points that deviate significantly from the mean, median, or other measures of central tendency.

- Clustering algorithms: Unsupervised ML algorithms, such as K-means or DBSCAN, can group similar data points together and identify clusters with unusual characteristics or low-density regions that may indicate outliers or anomalies.

- Classification algorithms: Supervised ML algorithms, such as SVM or Random Forest, can be trained on labeled data to classify instances as normal or anomalous, based on their features.

- Deep learning methods: Techniques such as autoencoders and recurrent neural networks (RNNs) can learn to reconstruct or predict data, with high reconstruction or prediction errors indicating potential anomalies.

Outlier detection, anomaly detection, and fraud detection are closely related concepts that help uncover unusual or suspicious activities, enabling businesses and organizations to proactively address potential issues, protect assets, and maintain the integrity of their operations.
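
As a rough illustration of the statistical methods listed above, the following sketch flags outliers with a Z-score rule and with an IQR rule, using only Python's standard library. The transaction amounts, the thresholds (3 standard deviations, 1.5 times the IQR), and the function names are assumptions chosen for the example, not recommendations for production use.

```python
import statistics


def zscore_outliers(values, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the mean."""
    mean = statistics.mean(values)
    stdev = statistics.pstdev(values)  # population standard deviation
    if stdev == 0:
        return []
    return [v for v in values if abs(v - mean) / stdev > threshold]


def iqr_outliers(values, k=1.5):
    """Flag values outside [Q1 - k * IQR, Q3 + k * IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical transaction amounts with one suspicious value.
amounts = [20, 22, 19, 21, 23, 20, 22, 21, 18, 24, 21, 500]
print(zscore_outliers(amounts))  # [500]
print(iqr_outliers(amounts))     # [500]
```

One caveat with the Z-score rule: a single extreme value inflates the standard deviation, so on very small samples no point can exceed a threshold of 3. The IQR rule is less sensitive to this effect because quartiles are barely moved by one extreme value.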

15. Collaborative Filtering

Collaborative filtering is a machine learning technique that makes recommendations to a user by analyzing the past behaviors or preferences of many users. The algorithm identifies patterns in user behavior, such as likes, dislikes, or ratings, and uses these patterns to make personalized suggestions. Collaborative filtering models rely on the assumption that users who had similar preferences in the past will continue to have similar tastes in the future.

There are two main types of collaborative filtering: user-based and item-based. User-based collaborative filtering compares a target user with other users who have similar preferences, and recommends items favored by those similar users. On the other hand, item-based collaborative filtering identifies relationships between items based on users’ preferences, and recommends items that are similar to those the target user has already liked or interacted with. Both approaches aim to provide personalized and relevant recommendations to users, enhancing their experience and engagement with a platform or service.
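
The item-based variant can be sketched in a few lines. The example below is a simplified illustration, not a production recommender: the ratings are invented, missing ratings are treated as 0 when comparing items (a common but crude simplification), and cosine similarity is just one of several possible similarity measures.

```python
import math

# Hypothetical user -> {item: rating} data on a 1-5 scale.
ratings = {
    "alice": {"Matrix": 5, "Inception": 4, "Titanic": 1},
    "bob": {"Matrix": 4, "Inception": 5, "Notebook": 1},
    "carol": {"Titanic": 5, "Notebook": 4, "Matrix": 1},
    "dave": {"Matrix": 5, "Titanic": 1},
}
users = sorted(ratings)
items = sorted({item for user_ratings in ratings.values() for item in user_ratings})


def item_vector(item):
    """Ratings for one item across all users (0 where a user has not rated it)."""
    return [ratings[user].get(item, 0) for user in users]


def cosine(a, b):
    """Cosine similarity between two equal-length rating vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(user, top_n=2):
    """Score unrated items by a similarity-weighted average of the user's ratings."""
    rated = ratings[user]
    scores = {}
    for candidate in items:
        if candidate in rated:
            continue
        weighted_sum = similarity_sum = 0.0
        for item, rating in rated.items():
            sim = cosine(item_vector(candidate), item_vector(item))
            weighted_sum += sim * rating
            similarity_sum += sim
        if similarity_sum > 0:
            scores[candidate] = weighted_sum / similarity_sum
    return sorted(scores.items(), key=lambda pair: pair[1], reverse=True)[:top_n]


print(recommend("dave"))
```

Here "dave" liked Matrix and disliked Titanic, so Inception (rated similarly to Matrix by other users) should rank above Notebook (rated similarly to Titanic).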

16. Financial trading

Artificial Intelligence and Machine Learning are used in financial trading in several ways, offering the potential to automate and enhance the accuracy of decision-making, and even to predict market trends. Here are some key applications:

- Algorithmic Trading: Algorithmic trading, or algo-trading, is the practice of executing trades automatically, at high speeds and volumes, according to pre-defined criteria. ML models can supply or refine those criteria by analyzing massive amounts of market data and triggering trades based on the patterns they identify.

- Predictive Analytics: ML can be used to predict stock prices or market movements based on historical data and a wide array of factors such as economic indicators, company balance sheets, or news sentiment. However, it’s important to note that while these models can identify trends and provide useful insights, they cannot predict future market conditions with absolute certainty due to the inherent unpredictability and complexity of financial markets.

- Risk Management: AI and ML can help identify potential risks and anomalies in trading activities, enhancing risk management strategies. For instance, they can help identify patterns that may lead to potential market crashes or identify trades that could lead to substantial losses.

- Portfolio Management: Robo-advisors use AI to manage investment portfolios, balancing risk and reward based on an investor’s specific goals and risk tolerance. They can automatically adjust the portfolio’s composition as market conditions change or as the investor’s needs evolve.

- High-Frequency Trading (HFT): In HFT, algorithms are used to conduct a large number of trades at very fast speeds, often on the order of microseconds or milliseconds. AI and ML can be used to make high-frequency trading more efficient and profitable by rapidly processing large volumes of data and making quick trading decisions.

- Sentiment Analysis: Traders can use ML algorithms to analyze news articles, social media posts, and other forms of unstructured data to gauge market sentiment. This sentiment can then inform trading decisions, with positive sentiment possibly indicating a good time to buy and negative sentiment possibly indicating a good time to sell.

In all these applications, the primary goal is to leverage the ability of AI and ML to process vast amounts of data quickly and accurately, something that would be almost impossible for human traders to achieve. However, it’s important to remember that while AI and ML can greatly enhance trading strategies, they are not infallible and should be used in conjunction with human oversight and sound risk management practices.
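
To show what "pre-defined criteria" can look like in code, here is a deliberately simple, rule-based sketch: a moving-average crossover signal. It is not a learned model and not trading advice. The price series, the window sizes, and the function names are assumptions made up for the example. An ML-based system would typically learn its decision rule from data instead of hard-coding it.

```python
def moving_average(prices, window, end_index):
    """Mean of the `window` prices ending at `end_index` (inclusive)."""
    chunk = prices[end_index - window + 1 : end_index + 1]
    return sum(chunk) / window


def crossover_signals(prices, short_window=2, long_window=4):
    """Emit 'buy' when the short average crosses above the long one, 'sell' on the reverse."""
    signals = []
    previous_diff = None
    for i in range(long_window - 1, len(prices)):
        diff = moving_average(prices, short_window, i) - moving_average(prices, long_window, i)
        if previous_diff is not None:
            if previous_diff <= 0 < diff:
                signals.append((i, "buy"))
            elif previous_diff >= 0 > diff:
                signals.append((i, "sell"))
        previous_diff = diff
    return signals


# Hypothetical closing prices: flat, then rising, then falling.
prices = [10, 10, 10, 10, 10, 11, 12, 13, 14, 15, 14, 12, 10, 8, 6]
print(crossover_signals(prices))  # [(5, 'buy'), (11, 'sell')]
```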

17. Video game AI

Video game AI refers to the use of artificial intelligence techniques to create autonomous entities within video games. These entities, often non-player characters (NPCs), can interact with players, the environment, and each other in complex and interesting ways. Video game AI can greatly enhance the realism, immersion, and overall gameplay experience. Here are some of the main ways AI is used in video games:

- Behavioral AI: This is used to govern the actions of NPCs in the game. For instance, in a first-person shooter game, the AI might control enemy soldiers, determining when they should take cover, when they should attack, and what tactics they should use. This often involves decision-making algorithms and state machines to switch between different behaviors based on the current situation.

- Pathfinding: Pathfinding algorithms, such as A* or Dijkstra’s algorithm, are used to navigate the game world. These algorithms help NPCs find the shortest or most efficient route from point A to point B, avoid obstacles, and navigate complex terrain.

- Procedural Content Generation: AI can be used to automatically generate game content, such as levels, quests, or even entire worlds. This can add a huge amount of replayability to a game, as each playthrough can offer new, dynamically generated content.

- Machine Learning: More recently, machine learning techniques have started to be used in video games. For example, reinforcement learning, where an AI learns by trial and error, can be used to train NPCs to perform complex tasks or develop challenging, adaptive behaviors.

- Player Modeling: AI can also be used to model player behavior, learning from the actions that a player takes in the game. This can be used to adjust the game’s difficulty, tailor the game experience to suit the player’s style, or even predict what the player will do next.

- Dialogue Systems: AI can be used to create dynamic dialogue systems, where NPCs can have natural-sounding conversations with players or react verbally to in-game events. This often involves natural language processing and generation techniques.

It’s important to note that the goal of video game AI is often different from other forms of AI. In many cases, the aim is not to create the most efficient or “intelligent” AI, but rather to create an AI that contributes to a fun, challenging, and engaging gameplay experience. For this reason, video game AI often involves a careful balance of predictability and unpredictability, challenge and fairness.
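
As an illustration of the pathfinding entry above, here is a compact sketch of A* on a small tile grid. The grid layout, the 4-directional movement, the unit step cost, and the Manhattan-distance heuristic are all assumptions chosen for the example. Real games often use navigation meshes or weighted terrain instead.

```python
import heapq


def astar(grid, start, goal):
    """Return a shortest 4-connected path on a grid of 0 (free) and 1 (wall) cells."""

    def heuristic(cell):
        # Manhattan distance never overestimates on a 4-connected unit-cost grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    rows, cols = len(grid), len(grid[0])
    open_heap = [(heuristic(start), 0, start)]  # (estimated total, cost so far, cell)
    came_from = {}
    best_cost = {start: 0}

    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk the parent links back to the start.
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if cost > best_cost[current]:
            continue  # stale heap entry; a cheaper route was already found
        row, col = current
        for nxt in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            r, c = nxt
            if 0 <= r < rows and 0 <= c < cols and grid[r][c] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = current
                    heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None  # goal unreachable


# Hypothetical level: 1 marks a wall tile.
level = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]
path = astar(level, (0, 0), (3, 0))
print(path)
```

In this level the only gap in the wall row is at column 2, so the NPC has to walk right, pass through the gap, and then double back to reach the goal.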

18. Drug discovery

Artificial Intelligence and Machine Learning have brought significant innovations to the field of drug discovery, expediting the process and increasing the chance of finding effective drugs. Here are some ways in which AI and ML are used in drug discovery:

- Target Identification: AI algorithms can analyze vast amounts of biological data to identify potential drug targets, such as specific proteins or genes that are implicated in a disease. These targets can then be used as a starting point for the development of new drugs.

- Predictive Modeling: ML models can predict how a potential drug will interact with its target, based on the drug’s chemical structure and the target’s biological characteristics. This helps researchers assess whether a potential drug is likely to be effective before it is tested in the lab.

- Drug Repurposing: AI can be used to find new uses for existing drugs. By analyzing the properties of a drug and its effects on various biological targets, AI can identify potential new applications for a drug, a process that is often faster and cheaper than developing a new drug from scratch.

- Personalized Medicine: ML algorithms can analyze patient data, such as genetic information or disease history, to predict how a patient will respond to a particular drug. This can lead to more personalized and effective treatment strategies.

- Virtual Screening: AI can be used to virtually screen millions of compounds to identify those that are most likely to be effective as drugs. This can significantly speed up the early stages of drug discovery, reducing the need for laborious and costly laboratory tests.

- Synthetic Biology: AI and ML can assist in designing synthetic biological systems, like new enzymes or metabolic pathways, for drug production. This can lead to more efficient and sustainable manufacturing processes for drugs.

- Clinical Trials: AI can help in designing more efficient clinical trials, identifying suitable participants, and monitoring the effects of the drug during the trial. AI can also analyze the results of clinical trials to detect patterns and correlations that may not be apparent to human researchers.

AI and ML applications in drug discovery have the potential to substantially reduce the time, cost, and failure rate associated with traditional drug discovery methods. However, it’s important to note that while AI can aid and accelerate many aspects of drug discovery, it does not replace the need for laboratory experiments and clinical trials, which are essential for confirming the safety and efficacy of new drugs.
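
One simple building block behind virtual screening is ranking compounds by their similarity to a molecule that is already known to be active. The sketch below does this with the Tanimoto (Jaccard) coefficient over molecular fingerprints, represented here as plain sets of feature indices. The compound names and fingerprints are invented for illustration. In practice, fingerprints would be computed from chemical structures by a cheminformatics toolkit, and similarity ranking would be only one step among many.

```python
def tanimoto(fingerprint_a, fingerprint_b):
    """Tanimoto coefficient: shared features divided by all features present."""
    a, b = set(fingerprint_a), set(fingerprint_b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def screen(library, reference, top_n=2):
    """Rank library compounds by similarity to a reference fingerprint."""
    scored = [(name, tanimoto(fp, reference)) for name, fp in library.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_n]


# Hypothetical fingerprints: each integer stands for one structural feature.
known_active = {1, 4, 7, 9, 12}
library = {
    "compound_A": {1, 4, 7, 9, 13},
    "compound_B": {2, 3, 5},
    "compound_C": {1, 4, 7, 9, 12, 15},
    "compound_D": {4, 9, 20, 21},
}
for name, score in screen(library, known_active):
    print(f"{name}: {score:.2f}")
```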

19. Weather forecasting

Artificial Intelligence and Machine Learning have been increasingly employed in weather forecasting to enhance the accuracy and efficiency of predictions. These technologies can process vast amounts of data and recognize patterns quickly, complementing traditional forecasting methods. Here are some ways AI and ML are used in weather forecasting:

- Data Analysis and Pattern Recognition: AI and ML algorithms can analyze vast amounts of historical weather data and identify patterns and trends. This analysis can help forecast future weather conditions based on similar patterns from the past. Techniques such as regression, decision trees, and neural networks are commonly used for this purpose.

- Predictive Modeling: Machine learning models can be trained on historical weather data to predict future conditions. These models can take into account a wide array of factors, such as temperature, humidity, wind speed, and atmospheric pressure, and predict how these factors will change over time.

- Image Analysis: AI can analyze satellite images and radar data to detect specific weather patterns or phenomena, such as hurricanes, storm fronts, or areas of high precipitation. Convolutional neural networks (CNNs), a type of deep learning model, are especially effective for image analysis tasks.

- Ensemble Forecasting: ML can be used to combine the outputs of multiple weather models into a single forecast that is often more accurate than any individual model. This approach, closely related to ensemble learning, can help account for the inherent uncertainty and variability in weather predictions.

- Climate Modeling: AI and ML can also aid in climate modeling, which deals with long-term patterns and averages of weather rather than day-to-day conditions, and can help scientists understand and predict the impacts of climate change.

- Nowcasting: This refers to the task of predicting weather conditions in the very short term, typically a few hours ahead. Machine learning can analyze real-time weather data and rapidly produce accurate nowcasts.

- Personalized Weather Forecasts: ML algorithms can provide personalized weather forecasts based on a user’s specific needs and activities. For example, a forecast for a runner might focus on temperature and air quality, while a forecast for a farmer might prioritize precipitation and wind speed.

While AI and ML can significantly improve the accuracy of weather forecasts, it’s essential to note that weather is inherently unpredictable, and no forecast can be 100% accurate. However, with the continuous advancements in these technologies, forecasts are becoming increasingly reliable, helping us prepare for various weather conditions more effectively.
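
The idea of combining several models into one forecast can be sketched with a very simple weighting scheme: each model gets a weight inversely proportional to its past mean squared error. The model names, temperatures, and the weighting rule itself are assumptions made for this example. Operational systems use considerably more sophisticated methods.

```python
def inverse_error_weights(past_forecasts, observations):
    """Weight each model by 1 / MSE on past data, normalized to sum to 1."""
    raw = {}
    for name, predictions in past_forecasts.items():
        squared_errors = [(p - o) ** 2 for p, o in zip(predictions, observations)]
        mse = sum(squared_errors) / len(squared_errors)
        raw[name] = 1.0 / (mse + 1e-9)  # small constant avoids division by zero
    total = sum(raw.values())
    return {name: weight / total for name, weight in raw.items()}


def combine(weights, new_forecasts):
    """Weighted average of the models' new forecasts."""
    return sum(weights[name] * new_forecasts[name] for name in weights)


# Hypothetical past temperatures (degrees Celsius) and two models' forecasts.
observed = [20, 22, 19, 21]
history = {
    "model_a": [21, 23, 20, 22],  # off by 1 degree each day
    "model_b": [22, 24, 21, 23],  # off by 2 degrees each day
}
weights = inverse_error_weights(history, observed)
tomorrow = combine(weights, {"model_a": 25.0, "model_b": 30.0})
print(weights, round(tomorrow, 2))
```

Because model_a had a quarter of model_b's squared error in the past, it receives about 80% of the weight, and the combined forecast lands close to 26 degrees.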

20. Predictive maintenance

Artificial Intelligence and Machine Learning are critical technologies for predictive maintenance, a strategy aimed at predicting when an in-service machine will require maintenance. The key objective is to minimize unexpected downtime and reduce the costs associated with unnecessary maintenance.

ML models are trained using historical and real-time data collected from various sensors embedded in machinery. These data include temperature, vibration, pressure, and other relevant metrics that help understand the machine’s performance and health. ML algorithms analyze these data to identify patterns or anomalies that could indicate a potential failure.

These machine-specific insights can then be combined with other information, such as maintenance records or operational schedules, to predict the optimal time for maintenance. This allows businesses to plan maintenance activities proactively, leading to improved operational efficiency, extended machinery life, and reduced costs.

In fields like manufacturing, aviation, and transportation, where equipment reliability is critical, the use of AI and ML for predictive maintenance has become increasingly prevalent. Despite the complexity and the need for large amounts of data, the benefits of predictive maintenance make it a promising application of AI and ML.
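
A very reduced version of this idea is to fit a trend to a sensor reading and estimate when it will cross an alarm level. The sketch below fits a least-squares line to vibration readings and extrapolates it. The readings, the threshold, and the assumption that wear progresses linearly are all hypothetical. Real systems typically combine many sensors and more flexible models.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def hours_until_threshold(hours, readings, threshold):
    """Estimate remaining hours before the fitted trend reaches `threshold`.

    Returns None when the trend is flat or decreasing.
    """
    slope, intercept = fit_line(hours, readings)
    if slope <= 0:
        return None
    crossing_time = (threshold - intercept) / slope
    return max(0.0, crossing_time - hours[-1])


# Hypothetical vibration readings (mm/s) sampled every 10 operating hours.
operating_hours = [0, 10, 20, 30, 40]
vibration = [2.0, 2.5, 3.0, 3.5, 4.0]
remaining = hours_until_threshold(operating_hours, vibration, threshold=6.0)
print(remaining)
```

With these numbers the vibration rises by 0.05 mm/s per hour, so the fitted line reaches the 6.0 mm/s threshold about 40 hours after the last reading.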

21. Robot control

Artificial Intelligence and Machine Learning play a vital role in robot control, enhancing the autonomy, adaptability, and efficiency of robotic systems.

In the realm of autonomy, AI and ML can help robots perform complex tasks without continuous human supervision. For instance, reinforcement learning, a type of ML, can allow a robot to learn from its interactions with the environment, improving its ability to navigate, pick up objects, or perform other tasks over time.

ML algorithms can also enable robots to adapt to changing environments or circumstances. For example, a robot can use ML to recognize and adapt to different surface types or obstacles, improving its mobility and effectiveness.

In addition, AI can be used for higher-level planning and decision-making in robotic systems. For instance, AI could help a robot plan the most efficient route to complete a task or decide when to switch between different activities based on its current context and goals.

In short, AI and ML are transforming robot control, making robots more versatile, intelligent, and capable of performing complex tasks in various fields, from manufacturing to healthcare and beyond.
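
To give a feel for how a robot can learn from interaction, here is a minimal sketch of tabular Q-learning, one common reinforcement learning algorithm. The environment is a made-up one-dimensional corridor with five positions, where the robot starts at position 0 and is rewarded for reaching position 4. The learning rate, discount factor, episode count, and purely random exploration are assumptions chosen to keep the example short.

```python
import random

N_STATES = 5  # corridor positions 0..4; position 4 is the goal
ACTIONS = ["left", "right"]


def step(state, action):
    """Move one cell, staying inside the corridor; reward 1.0 on reaching the goal."""
    if action == "left":
        next_state = max(0, state - 1)
    else:
        next_state = min(N_STATES - 1, state + 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    """Learn a Q-table while exploring with uniformly random actions."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Q-learning is off-policy, so it can learn from random behavior.
            action = rng.choice(ACTIONS)
            next_state, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


q_table = train()
# Greedy policy: the best-valued action in each non-goal position.
policy = [max(ACTIONS, key=lambda a: q_table[(s, a)]) for s in range(N_STATES - 1)]
print(policy)
```

After training, the greedy policy should choose "right" in every position, since that is the shortest way to the goal. Physical robots face continuous states, noisy sensors, and safety constraints, so practical systems usually rely on function approximation and simulation rather than a small table like this one.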