Learn the essentials of model management with our guide. This article walks through saving your trained Scikit-Learn classifiers using two separate libraries: Pickle and Joblib. Expect a deep dive into the pros, cons, and how-tos of each method, so that your models are not only securely stored but also readily accessible for future predictions, sharing, or further analysis. Whether you’re a seasoned data scientist or a budding analyst, learn how to store and retrieve models efficiently and effectively without the retraining hassle, keeping your computational work both sustainable and shareable!

If you only want the quick and easy answer in 10 seconds, here it is:

Saving a model in Scikit-Learn using Pickle:

classifier = ...  # Create and train a classifier

import pickle

# Save the model to disk
with open('saved_model.pkl', 'wb') as file:
    pickle.dump(classifier, file)

Loading the same model:

import pickle

# Load the model from disk
with open('saved_model.pkl', 'rb') as file:
    loaded_classifier = pickle.load(file)

# Use the loaded model to make predictions on new data
predictions = loaded_classifier.predict(...)

Table of contents:

- How to Save a Classifier to Disk: Introduction to Model Preservation

- How to load a model from disk

- Pros and Cons of pickle and Joblib

- Considerations and Best Practices for Model Management

- Common Issues and Tips for Model Management in Scikit-learn

1. How to Save a Classifier to Disk: Introduction to Model Preservation

In the realm of data science and machine learning, creating a model is merely a part of the journey. Once a model is trained, tested, and fine-tuned to provide desirable predictions or classifications, preserving it for future use becomes imperative. In particular, saving a model to disk enables developers and data scientists to use it across different projects, share it with peers, or deploy it into production, all without having to retrain it – a process that can be computationally expensive and time-consuming.

The task of saving a model, also known as model serialization, encapsulates the model into a format suitable for storage or transmission. In the context of Scikit-Learn, a popular library in Python for machine learning, models can be effectively serialized and saved to disk using various methods, ensuring that the fruits of your computational labor are securely stored and effortlessly retrievable when needed.
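Serialization can be observed without touching the disk at all. The minimal sketch below (assuming scikit-learn is installed; the names `model`, `blob` and `restored` are only illustrative) turns a trained classifier into a byte string with `pickle.dumps` and rebuilds it with `pickle.loads`:

```python
import pickle

from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier

# Train a small classifier to have something worth serializing
X, y = load_iris(return_X_y=True)
model = DecisionTreeClassifier(random_state=0).fit(X, y)

blob = pickle.dumps(model)     # serialize: model object -> bytes
restored = pickle.loads(blob)  # deserialize: bytes -> new model object

print(type(blob).__name__)  # bytes
print(restored is model)    # False: a new, equivalent object
print((restored.predict(X) == model.predict(X)).all())  # True
```

Writing those bytes to a file is all that "saving to disk" adds on top of this.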

In the forthcoming sections, we will learn techniques for saving a Scikit-Learn classifier, delving into methods using both Pickle and Joblib, popular libraries in Python for object serialization.

Step 1: Train a Classifier

Before we get to saving a model, let’s train a simple classifier using Scikit-Learn. Below is a basic example where we’ll use the famous Iris dataset to train a decision tree classifier.

from sklearn import datasets
from sklearn.tree import DecisionTreeClassifier

# Load the iris dataset
iris = datasets.load_iris()
X = iris.data
y = iris.target

# Create and train a decision tree classifier
classifier = DecisionTreeClassifier()
classifier.fit(X, y)

In the code snippet above:

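- datasets.load_iris() loads the Iris dataset; X holds the feature measurements and y the target class labels.

- DecisionTreeClassifier() creates an untrained decision tree classifier.

- fit(X, y) trains the classifier on the features X and the labels y.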

Saving a Scikit-Learn Model to Disk Using Pickle

Pickle is a module in the Python standard library that allows objects to be serialized to a byte stream and deserialized back. In the context of Scikit-Learn, serializing a model (or classifier) means converting it into a format that can be stored or transferred, and deserializing it means recovering the original model from the stored format. Here’s a straightforward guide on how to use Pickle to save your Scikit-Learn classifier to disk.

Step 2: Ensure Availability of Pickle

Python’s Pickle module should be available by default as it is part of the standard library. There’s typically no need to install it separately. You can simply import it to get started:

import pickle

Step 3: Save the Classifier using Pickle

Once we have a trained model, we can use Pickle to save it to disk. Here’s how we can do this:

import pickle

# Save the model to disk
with open('saved_model.pkl', 'wb') as file:
    pickle.dump(classifier, file)

Explanation:

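- open('saved_model.pkl', 'wb') as file: opens (or creates) a file named saved_model.pkl in write-binary mode (wb). The with statement makes sure the file is closed once the block ends.

- pickle.dump(classifier, file): serializes the trained classifier and writes the resulting bytes to the file.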

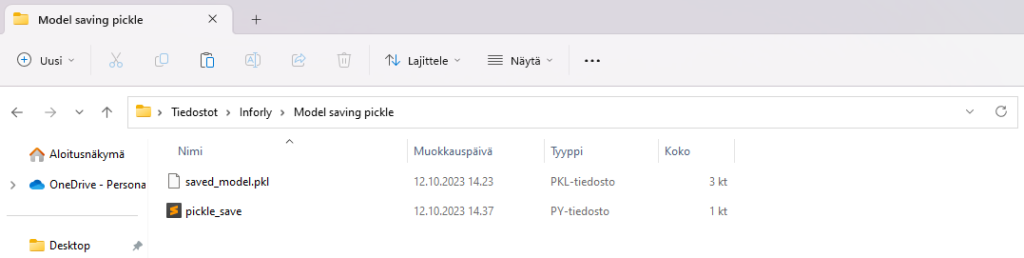

If you have saved the aforementioned code to a file called “pickle_save.py“, and then run the code in this file, you should now have the following files in your folder:

As you can see, the trained classifier is now saved in the file “saved_model.pkl“.

Now we have learned how to train a basic decision tree classifier using Scikit-Learn and save it to disk using Pickle. The saved model can be later loaded and utilized to make predictions, ensuring that you can effectively store and transport your machine learning models for future use. In subsequent sections, we’ll explore how to load the saved model back into your Python environment.

Let’s next check out how to do the same thing, but with a different library called Joblib:

Saving a Scikit-Learn Model to Disk Using Joblib

While Pickle provides general utilities for serialization in Python, joblib is particularly optimized for efficiently storing large numpy arrays, making it more suitable for models that need to store a large amount of data, which is often the case with Scikit-Learn models.

Alternative Step 2: Ensure the Availability of Joblib

Before utilizing joblib, ensure that it is installed in your Python environment. You can do this through the command line using the Python package installer pip.

For a direct check, you might use pip show followed by the package name to get information about that specific package, if installed:

pip show joblib

If joblib is installed, this command will display a summary, including the version number and other details about the package. If it’s not installed, no summary is shown (recent versions of pip print a warning that the package was not found instead), and you can install it using pip:

pip install joblib
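If you would rather check from inside Python than from the command line, the standard library's `importlib.metadata` (Python 3.8+) can report the installed version of a distribution. A small sketch; the helper name `installed_version` is hypothetical:

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(distribution_name):
    """Return the installed version string of a package, or None if it is missing."""
    try:
        return version(distribution_name)
    except PackageNotFoundError:
        return None


# Look up distribution names as pip knows them ("scikit-learn", not "sklearn")
print(installed_version("joblib"))
print(installed_version("scikit-learn"))
```

This behaves much like `pip show`: a version string means the package is available, `None` means it still needs to be installed.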

Alternative Step 3: Save the Classifier using Joblib

Once joblib is installed, we can proceed to save our previously trained classifier (as per the example in the previous section) using joblib. Below is a simple example:

from joblib import dump

# Save the model to disk
dump(classifier, 'classifier_model.joblib')

Explanation:

- dump(classifier, 'classifier_model.joblib'): serializes the trained classifier object and writes it to a file named classifier_model.joblib.

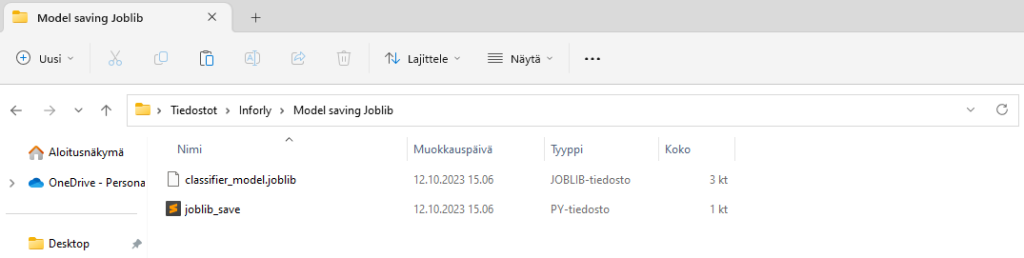

If you have saved the aforementioned code to a file called “joblib_save.py

As you can see, the trained classifier is now saved in the file “classifier_model.joblib“.

Note on Efficiency: Joblib is often more efficient than Pickle when dealing with large numpy arrays (common in Scikit-learn models) because it writes the arrays' raw data buffers to disk directly instead of passing them through the generic pickling machinery. The reduction in serialization time can be quite significant with large models, and Joblib's optional compression can shrink the files further.
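Joblib's compression is enabled through the `compress` argument of `dump`; an integer from 0 to 9 selects a zlib compression level. The sketch below uses a dictionary holding a large, highly compressible array as a hypothetical stand-in for a model, so the size difference is easy to see. Real models will usually compress far less dramatically:

```python
import os
import tempfile

import numpy as np
from joblib import dump, load

# Hypothetical stand-in for a model: a large array of zeros compresses extremely well
payload = {"weights": np.zeros((1000, 1000))}

with tempfile.TemporaryDirectory() as tmp:
    raw_path = os.path.join(tmp, "model.joblib")
    zipped_path = os.path.join(tmp, "model_compressed.joblib")

    dump(payload, raw_path)                 # default: no compression
    dump(payload, zipped_path, compress=3)  # zlib, compression level 3

    raw_size = os.path.getsize(raw_path)
    zipped_size = os.path.getsize(zipped_path)
    restored = load(zipped_path)            # load() decompresses transparently

print(raw_size, zipped_size)
```

Higher levels trade slower saving and loading for smaller files, so a moderate level such as 3 is a reasonable starting point.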

Similar to Pickle, when using Joblib to save models, it’s advisable to be wary of compatibility issues, especially when sharing models between different platforms or Python versions.

Joblib offers an efficient alternative to Pickle for saving Scikit-learn models, particularly when they contain large numpy arrays. With joblib.dump(), a trained model can be saved to disk in a single function call.

2. How to load a model from disk

This section provides a concise guide to retrieving and utilizing stored machine learning models using Pickle and Joblib. Bridging developmental and applicative phases, we will explore the essentials of loading and deploying saved Scikit-Learn models, ensuring optimal resource use and seamless transition between model storage and implementation.

Loading a Scikit-Learn Model from Disk Using Pickle

Introduction

Having discussed the nuances of saving a Scikit-Learn classifier to disk utilizing Pickle in the previous sections, our journey now delves into its counterpart: loading the saved model back into the Python environment. This step is crucial to re-utilize the trained model for predictions, further training, or analysis without undergoing the potentially taxing process of re-training it. Let’s dive into the step-by-step procedure for loading a classifier using Pickle.

Step 1: Load the Classifier

To load the saved model, we need to read the stored byte stream from the file and deserialize it back into a Python object. Here’s how this can be done:

import pickle

# Load the model from disk
with open('saved_model.pkl', 'rb') as file:
    loaded_classifier = pickle.load(file)

Explanation:

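- open('saved_model.pkl', 'rb') as file: open() opens the file ‘saved_model.pkl’ in read-binary mode ('rb'), and as file binds the opened file to the variable file.

- pickle.load(file): reads the byte stream from the file, deserializes it back into a Python object, and assigns the result (our trained classifier) to loaded_classifier.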

Note on Compatibility and Security:

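Ensure that the model is loaded with the same version of Scikit-Learn with which it was saved to prevent incompatibilities. Moreover, it is imperative to note that pickle.load() can execute arbitrary code contained in the file, so only load pickle files from sources you trust.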

Step 2: Utilizing the Loaded Classifier

Once the classifier is loaded, it can be utilized just like the original trained model for making predictions or further training:

# Example: Use the loaded model to make predictions (sample feature values)
predictions = loaded_classifier.predict([[5.1, 3.5, 1.4, 0.2]])
print(predictions)

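Here, loaded_classifier.predict() predicts the class of a single flower with the feature values [5.1, 3.5, 1.4, 0.2] (sepal length, sepal width, petal length and petal width in cm). The output is the class label 0, which corresponds to the species setosa:

[0]

Loading a Scikit-Learn Model from Disk Using Joblib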

Having explored the serialization and storage of a Scikit-Learn classifier using Joblib in previous discussions, it is equally crucial to master the art of deserialization – reanimating a stored model back into the computational environment for subsequent use, be it predictions, further training, or analyses. In this segment, we shall delve into the detailed steps and considerations involved in loading a model with Joblib, ensuring that your stored classifiers are readily available whenever they are called upon.

Step 1: Load the Classifier

Loading a model that was saved with Joblib involves reading the file from disk and deserializing it back into a Python object. Below is a simple guide:

from joblib import load

# Load the model from disk

loaded_classifier = load('classifier_model.joblib')

Explanation:

- load('classifier_model.joblib'): reads the file ‘classifier_model.joblib’ from disk and deserializes its contents. The resulting object, which is our trained classifier, is assigned to loaded_classifier.
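A quick way to confirm that nothing was lost in the round trip is to compare the loaded model's predictions with the original model's on the same inputs. The self-contained sketch below retrains the Iris decision tree, saves it to a temporary folder with Joblib and reloads it. The temporary path is only there to keep the example tidy:

```python
import os
import tempfile

import numpy as np
from joblib import dump, load
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
classifier = DecisionTreeClassifier(random_state=0).fit(X, y)

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "classifier_model.joblib")
    dump(classifier, path)           # save
    loaded_classifier = load(path)   # load it straight back

# The reloaded model should make exactly the same predictions
same = np.array_equal(classifier.predict(X), loaded_classifier.predict(X))
print(same)  # True
```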

Note on Compatibility and Security:

Make sure to load the model with the same versions of Scikit-Learn and related dependencies that were used to save it, to prevent compatibility issues. In terms of security, Joblib is no safer than Pickle: joblib.load() relies on pickle internally and can therefore execute arbitrary code during loading. Only load model files from sources you trust to maintain the integrity of your applications and data.

Step 2: Employ the Loaded Classifier

The loaded classifier, now residing in loaded_classifier, can be used exactly like the original trained model, for example to make predictions:

# Example: Making predictions using the loaded classifier (sample feature values)
predictions = loaded_classifier.predict([[5.1, 3.5, 1.4, 0.2]])
print(predictions)

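In this instance, loaded_classifier.predict() predicts the class of a flower with the feature values [5.1, 3.5, 1.4, 0.2]. As with the Pickle version, the output is:

[0]

3. Pros and Cons of pickle and Joblib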

Pickle and Joblib present two fundamental approaches for saving and loading Scikit-Learn machine learning models. While both offer convenience and are widely utilized, they exhibit distinctive advantages and downsides which cater to varying use-case scenarios.

Pickle

Advantages:

- Universality: Being Python’s intrinsic object persistence mechanism, pickle has the ability to serialize a broad range of Python objects.

- Ease of Use: With its incorporation into Python and a straightforward API, pickle is user-friendly and requires no additional installations.

Disadvantages:

- Efficiency: Pickle does not excel in efficiency when dealing with objects heavily reliant on NumPy arrays, a common scenario in scikit-learn.

- Security Concerns: Pickle poses security risks as it can execute arbitrary code during loading. Always be wary of unpickling data from unverified sources.

- Compatibility Issues: A pickle file stores references to the classes it contains rather than their code, so a model pickled with one version of Python or scikit-learn is not guaranteed to load, or to behave identically, with another.
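The security concern is easy to demonstrate harmlessly. A pickled object can tell the unpickler which callable to run when rebuilding it, through the `__reduce__` method. In the sketch below, the hypothetical class `NotReallyAModel` names the harmless `int("42")`, but a malicious file could name any importable function instead:

```python
import pickle


class NotReallyAModel:
    """Hypothetical class used only to show what unpickling can do."""

    def __reduce__(self):
        # Tells pickle: "to rebuild me, call int('42')". A malicious file could
        # name any importable callable here (os.system, for instance).
        return (int, ("42",))


blob = pickle.dumps(NotReallyAModel())
result = pickle.loads(blob)  # runs int("42") instead of rebuilding the object
print(result, type(result).__name__)  # 42 int
```

The code runs during `pickle.loads` itself, before you ever get a chance to inspect the returned object.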

Joblib

Advantages:

- Efficiency: Tailored for objects utilizing NumPy data structures, joblib presents a more efficient alternative to pickle in contexts involving sizable NumPy arrays.

- Disk Usage: Joblib is adept at managing disk usage, particularly with large NumPy arrays, often outperforming pickle in this regard.

- Compressed Serialization: Joblib provides built-in functionalities for compressing serialized objects, aiding in further disk space conservation.

Disadvantages:

- Scope: Joblib's advantages are concentrated on objects containing large NumPy arrays; for other Python objects it relies on pickle internally and offers little benefit over it.

- Installation: Joblib is not part of the Python standard library, so it has to be installed separately (although it is installed automatically as a dependency of scikit-learn).

- Compatibility: Similar to pickle, joblib does not ensure backward compatibility, causing potential issues when attempting to load a model saved with a different version.

Note on Security: Regardless of the method, never load pickle or joblib files from untrusted sources, because loading them can execute malicious code.
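One practical safeguard is to record a cryptographic hash of the model file when you save it, and to refuse to load any file whose hash no longer matches. The sketch below uses only the standard library. The helper names `sha256_of` and `load_if_checksum_matches` are hypothetical, and a small dictionary stands in for a trained classifier. The check only helps if the expected hash itself comes from a trusted place, not from the same channel as the file:

```python
import hashlib
import hmac
import os
import pickle
import tempfile


def sha256_of(path):
    """Hash a file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(1 << 16), b""):
            digest.update(chunk)
    return digest.hexdigest()


def load_if_checksum_matches(path, expected_sha256):
    """Hypothetical helper: refuse to unpickle a file whose hash is unexpected."""
    if not hmac.compare_digest(sha256_of(path), expected_sha256):
        raise ValueError(f"checksum mismatch for {path}; refusing to load")
    with open(path, "rb") as f:
        return pickle.load(f)


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "saved_model.pkl")
    with open(path, "wb") as f:
        pickle.dump({"stand_in_for": "a trained classifier"}, f)

    # Record the hash at save time and keep it somewhere you trust
    trusted_hash = sha256_of(path)
    obj = load_if_checksum_matches(path, trusted_hash)

    # Simulate tampering by appending a byte, then try loading again
    with open(path, "ab") as f:
        f.write(b"\x00")
    try:
        load_if_checksum_matches(path, trusted_hash)
        tampered_loaded = True
    except ValueError:
        tampered_loaded = False

print(obj, tampered_loaded)
```

The same approach works unchanged for files written with `joblib.dump`, with `joblib.load` in place of `pickle.load`.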

In summation, while Pickle and Joblib both offer valuable functionalities for serializing Python objects, the specific use-case, data structure characteristics, and model deployment contexts should dictate the optimal choice. Special attention should also be paid to maintaining security and facilitating future usability when saving and sharing models.

4. Considerations and Best Practices for Model Management

- Metadata Management: Preserving additional metadata such as training data, Python source code, version details of scikit-learn and dependencies, and cross-validation scores is imperative. This facilitates verifying consistency in performance metrics and supports rebuilding models with future scikit-learn versions.

- Deployment: Often, models are deployed in production environments using containers (e.g., Docker) to encapsulate and preserve the execution environment and dependencies, enhancing portability and mitigating issues related to version inconsistencies.

- Optimization Through Data Type Selection: Opt for efficient data types to minimize your models’ size. For example, using 32-bit or 16-bit data types in place of 64-bit ones, when applicable, can reduce memory usage, often with little or no loss in predictive performance, which improves load times and operational efficiency.

- Implement Model Optimization Strategies: Explore techniques such as model pruning and quantization to create more efficient models. Pruning removes non-essential parts of a model (for example, branches of a decision tree or weights of a neural network), while quantization decreases parameter precision. Both can reduce model size and improve loading times without substantially impacting performance.

- Employ Compression Techniques for Models: Compress your models when storing them, either with joblib's built-in compression or by combining pickle with a standard-library compression module such as gzip, to significantly reduce the required disk space. This is especially valuable for large models or in scenarios where storage space is a crucial factor.

- Employ Parallel Loading: When dealing with large or multiple models, parallel loading can be an effective strategy to minimize loading time. The feasibility of this approach is subject to your system’s I/O capabilities.

- Take Advantage of Hardware Accelerators: Ensure that your models are configured to leverage hardware accelerators like GPUs or TPUs, if available and supported by your library. Doing so can markedly speed up training and prediction by utilizing the specialized resources offered by these devices; note, however, that most scikit-learn estimators run on the CPU.

- Emphasis on Version Control and File Management: Effectively managing versions and files is essential to streamline development and deployment processes. Implement version control systems to track changes and manage different iterations of your models, ensuring reproducibility and efficient collaboration among developers.

- Ensuring Model Security: It’s important to safeguard your models, especially when they contain sensitive data or are proprietary. Employ encryption for your model files to obstruct unauthorized access and always adhere to prevalent security protocols when sharing your models.
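The metadata point at the top of this list can be put into practice by writing a small JSON "sidecar" file next to each saved model and comparing it with the current environment before loading. The sketch below is one possible layout, not a standard format. The helper names (`current_versions`, `save_with_metadata`, `version_mismatches`) and the key `cv_accuracy_mean` are hypothetical:

```python
import json
import pickle
import platform
import tempfile
from pathlib import Path

import numpy
import sklearn
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier


def current_versions():
    """Versions that affect whether a pickled model loads and behaves the same."""
    return {
        "python": platform.python_version(),
        "numpy": numpy.__version__,
        "scikit-learn": sklearn.__version__,
    }


def save_with_metadata(model, model_path, extra=None):
    """Hypothetical helper: pickle the model and write a JSON sidecar next to it."""
    model_path = Path(model_path)
    with open(model_path, "wb") as f:
        pickle.dump(model, f)
    metadata = {"versions": current_versions(), **(extra or {})}
    meta_path = model_path.with_suffix(".json")
    meta_path.write_text(json.dumps(metadata, indent=2))
    return meta_path


def version_mismatches(meta_path):
    """Return {name: (saved, current)} for every recorded version that differs."""
    saved = json.loads(Path(meta_path).read_text())["versions"]
    now = current_versions()
    return {k: (saved[k], now.get(k)) for k in saved if saved[k] != now.get(k)}


X, y = load_iris(return_X_y=True)
clf = DecisionTreeClassifier(random_state=0)
cv_scores = cross_val_score(clf, X, y, cv=5)  # keep the validation score too
clf.fit(X, y)

with tempfile.TemporaryDirectory() as tmp:
    meta_path = save_with_metadata(
        clf, Path(tmp) / "model.pkl",
        extra={"cv_accuracy_mean": float(cv_scores.mean())},
    )
    metadata = json.loads(meta_path.read_text())
    mismatches = version_mismatches(meta_path)

print(mismatches)  # {} when loading in the same environment
```

A non-empty result is a signal to retest, or retrain, the model before trusting it.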

These points offer a coherent and comprehensive approach to model management, encompassing security, efficiency, and operational effectiveness.
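The compression point above applies to plain pickle as well: pickle has no compression option of its own, but it writes to any file-like object, so a file opened with the standard library's `gzip.open` compresses transparently (`bz2.open` and `lzma.open` work the same way). A minimal sketch, assuming scikit-learn is installed:

```python
import gzip
import os
import pickle
import tempfile

from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
classifier = DecisionTreeClassifier(random_state=0).fit(X, y)

with tempfile.TemporaryDirectory() as tmp:
    plain_path = os.path.join(tmp, "saved_model.pkl")
    gz_path = os.path.join(tmp, "saved_model.pkl.gz")

    with open(plain_path, "wb") as f:
        pickle.dump(classifier, f)
    # gzip.open returns a file-like object, so pickle writes through it unchanged
    with gzip.open(gz_path, "wb") as f:
        pickle.dump(classifier, f)

    # Loading mirrors saving: open with gzip, then unpickle
    with gzip.open(gz_path, "rb") as f:
        restored = pickle.load(f)

    sizes = {"plain": os.path.getsize(plain_path), "gzip": os.path.getsize(gz_path)}

print(sizes)
print((restored.predict(X) == classifier.predict(X)).all())  # True
```

How much space this saves depends on the model; for a tiny tree like this one the difference is small, while large array-heavy models benefit more.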

5. Common Issues and Tips for Model Management in Scikit-learn

Handling Version and Compatibility Issues

- Scikit-learn Updates: Test and, if necessary, retrain and save models using the updated version of scikit-learn post-upgrade to avoid compatibility issues.

- Inconsistent Versions Warning: When an estimator, pickled with a certain scikit-learn version, is unpickled with a different version, an InconsistentVersionWarning is raised (scikit-learn 1.3 and later). Ensure consistent version usage to mitigate this issue. Here’s an example of handling such a warning:

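from sklearn.exceptions import InconsistentVersionWarning
import warnings
import pickle

# Turn the warning into an error so that it can be caught below
warnings.simplefilter("error", InconsistentVersionWarning)

try:
    with open("model_from_previous_version.pickle", "rb") as file:
        est = pickle.load(file)
except InconsistentVersionWarning as w:
    # The scikit-learn version the model was originally saved with
    print(w.original_sklearn_version)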

- Large File Sizes and Compression: Manage storage issues and slow loading times by compressing models, for example with joblib's compress option or with pickle combined with gzip, which can notably reduce file sizes. Prefer joblib for saving and loading scikit-learn models due to its efficiency with NumPy data structures.

- Custom Functions or Objects: If your model incorporates custom functions or objects that are vital for model deserialization, ensure that those are defined or imported in the same scope when loading a model. Alternatively, upon modifying custom functions or objects, check compatibility and retrain the classifier if needed, ensuring no adverse impacts on saved models.
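The reason custom functions must be available at load time is that pickle stores a module-level function by reference (its module and name), not by its code. The sketch below shows this with a hypothetical helper `add_one`. The same mechanism applies when such a function is stored inside a model, for example in a scikit-learn `FunctionTransformer`:

```python
import pickle


def add_one(X):
    """Hypothetical custom preprocessing helper defined at module level."""
    return [[value + 1 for value in row] for row in X]


# Only the function's module and name are stored, not its code
blob = pickle.dumps(add_one)
print(b"add_one" in blob)  # True

# If that name is not defined when loading, unpickling fails
saved_helper = add_one
del add_one
try:
    pickle.loads(blob)
    loaded_without_definition = True
except (AttributeError, pickle.UnpicklingError):
    loaded_without_definition = False
add_one = saved_helper  # restore the definition; loading works again
restored = pickle.loads(blob)
print(loaded_without_definition, restored([[1, 2]]))  # False [[2, 3]]

# Lambdas have no importable name, so pickle cannot store them by reference
try:
    pickle.dumps(lambda X: X)
    lambda_pickled = True
except (pickle.PicklingError, AttributeError):
    lambda_pickled = False
print(lambda_pickled)  # False
```

In practice this means putting such helpers in an importable module rather than defining them inline in a notebook or as lambdas.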

Managing Model Changes

- Version Control: Employ systems like Git or Weights & Biases Artifacts for tracking model changes, enabling easy rollbacks and maintaining consistency.

- Metadata Management: Log comprehensive records of hyperparameters, preprocessing steps, and metrics. This can be done using tools like the Weights & Biases Artifacts API for example.

Finalizing Machine Learning Models: Key Considerations

- Python Version: Ensure the Python version is consistent during serialization and deserialization of models.

- Library Versions: Maintain consistent versions of all major libraries, including NumPy and scikit-learn, during model deserialization.

- Manual Serialization: Optionally, manually output model parameters for use in scikit-learn or other platforms, providing control and simplicity for implementing algorithms used in predictions.
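As an illustration of manual serialization, a linear classifier's learned parameters can be exported as plain JSON and its prediction rule re-implemented without pickle. This sketch uses LogisticRegression rather than the article's decision tree because its prediction rule is a short formula: for more than two classes, scikit-learn predicts the class whose score `X @ coef_.T + intercept_` is largest. The JSON keys are an arbitrary choice:

```python
import json

import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000).fit(X, y)

# Export only the learned parameters as plain JSON (no pickle involved)
params = {
    "coef": model.coef_.tolist(),
    "intercept": model.intercept_.tolist(),
    "classes": model.classes_.tolist(),
}
text = json.dumps(params)

# Re-implement prediction from the parameters alone
loaded = json.loads(text)
W = np.array(loaded["coef"])       # shape (n_classes, n_features)
b = np.array(loaded["intercept"])  # shape (n_classes,)
scores = X @ W.T + b               # one score per class for each sample
manual_pred = np.array(loaded["classes"])[scores.argmax(axis=1)]

print((manual_pred == model.predict(X)).all())  # True
```

The exported JSON does not depend on the scikit-learn version, and the prediction logic could be ported to another language; the trade-off is that you now maintain that logic yourself.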

Incorporating these practices aids in effective model management and promotes consistent and reproducible machine learning project outcomes.